What is A2A?

The A2A protocol is an open standard that gives AI agents a shared communication layer so they can collaborate reliably across different stacks, vendors, and infrastructures. It treats agents as autonomous problem solvers and defines a common “language” and contract for them to exchange goals and results, allowing independently built agents from different organizations and frameworks to interoperate as part of one cohesive system rather than isolated silos.

Microsoft’s support for the Agent2Agent protocol marks a step change in how AI agents collaborate across heterogeneous platforms and environments. Announced on May 7, 2025, this move enables both Azure AI Foundry and Copilot Studio to support native agent-to-agent communication, so enterprises can orchestrate sophisticated multi-agent workflows that span multiple clouds and organizational boundaries.

What happens without the A2A protocol?

Without a unified protocol such as A2A, connecting different agents exposes a range of practical difficulties for most organizations. The sections below describe how each one adds ongoing complexity to agent ecosystems.

Agent Exposure

Developers often end up wrapping agents as simple “tools” so that other agents can call them. This runs counter to how agents are meant to work, which is by negotiating directly with one another, and it quietly strips away many of their native capabilities.

Custom Integrations

Every new agent-to-agent interaction typically requires a bespoke, point-to-point integration, adding substantial engineering effort and fragile wiring for each use case.

Slow Innovation

Because each integration is custom-built, adding or changing agents slows teams down, making it harder to iterate quickly on new AI-driven workflows and products.

Scalability Issues

As the number of agents and interactions grows, these one-off links become difficult to scale and maintain, turning the overall system into a brittle web of dependencies.
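The scaling problem can be made concrete with a little arithmetic. The sketch below assumes the worst case, where every pair of agents needs its own bespoke link, and compares that with each agent implementing one shared protocol once. The function names are illustrative only.

```python
def point_to_point_links(agent_count: int) -> int:
    """Distinct agent pairs, each needing its own custom integration."""
    return agent_count * (agent_count - 1) // 2


def shared_protocol_adapters(agent_count: int) -> int:
    """With one shared protocol, each agent implements it exactly once."""
    return agent_count


for n in (5, 10, 50):
    # Columns: agents, pairwise links, protocol adapters
    print(n, point_to_point_links(n), shared_protocol_adapters(n))
```

Under this assumption, 50 agents would need 1,225 pairwise integrations but only 50 protocol implementations. Real systems rarely connect every pair, so treat the first number as an upper bound.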

Limited Interoperability

This integration style keeps agents locked inside platform or team silos, blocking the emergence of a true, cross-ecosystem network of collaborating agents.

Security Gaps

Ad hoc communication patterns rarely apply consistent authentication, authorization, and audit controls, leaving enterprises with uneven and hard-to-govern security.

Why A2A is so transformative

The A2A protocol directly addresses these issues by standardizing how agents discover each other, exchange messages, and coordinate work. With a shared protocol, AI agents can interoperate reliably and securely across frameworks, vendors, and organizations, while engineering teams focus on what the agents should do, not on reinventing the integration plumbing each time.

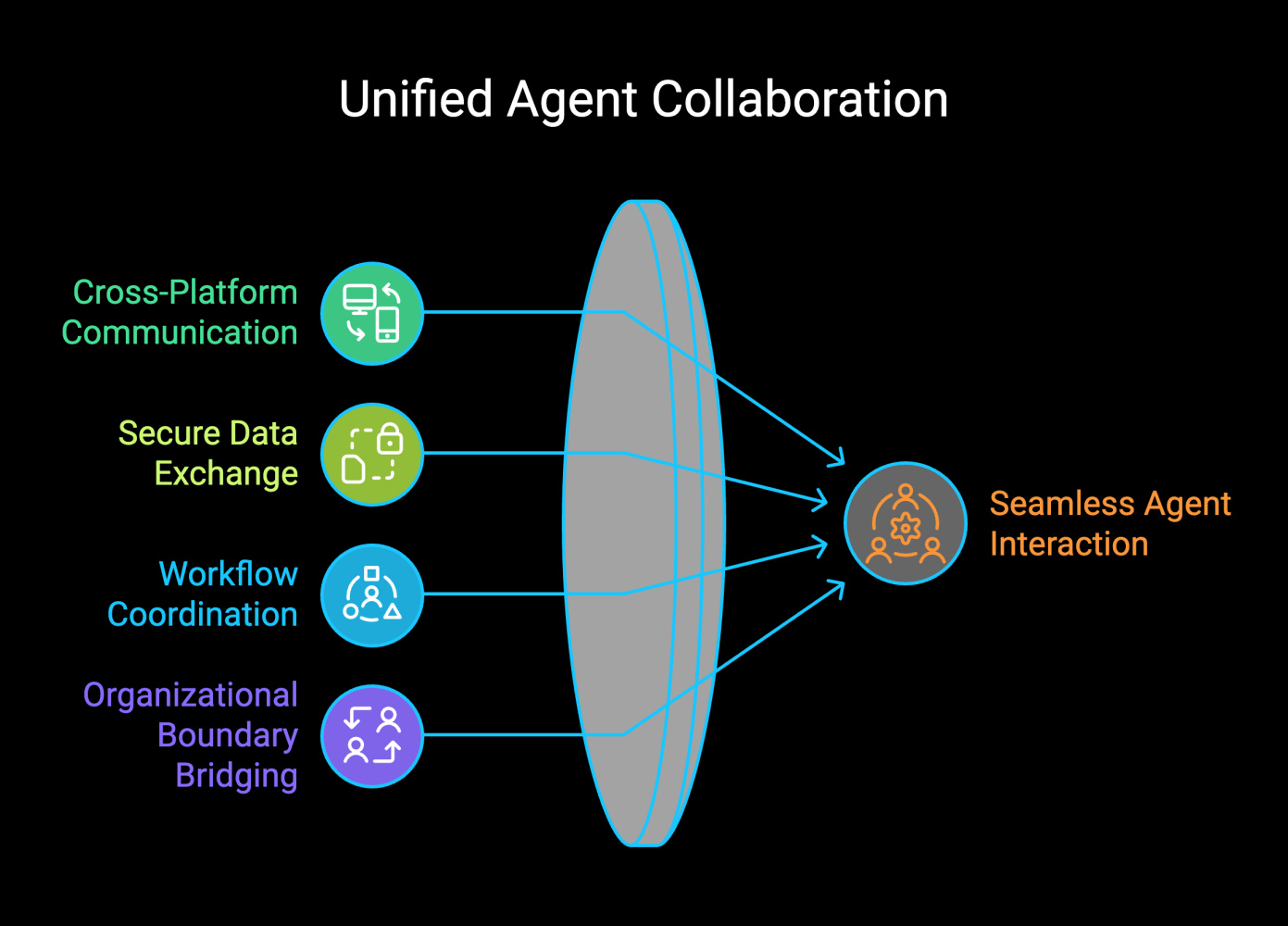

The A2A protocol gives agents a common pattern for how to work together in real systems. It lets them talk across different platforms and clouds, exchange data and capabilities safely, coordinate multi-step tasks, and do all of that across organizational boundaries without breaking security or governance.
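To illustrate the discovery step, here is a minimal sketch in which each agent publishes a small descriptor and a caller picks a peer by advertised skill. It is loosely modeled on the Agent Card idea in A2A. The field names, the in-memory registry, and the example URLs are simplifying assumptions, not the specification's schema.

```python
from dataclasses import dataclass, field


@dataclass
class Skill:
    id: str
    description: str


@dataclass
class AgentCard:
    """Illustrative descriptor an agent publishes so peers can find it."""
    name: str
    url: str
    skills: list[Skill] = field(default_factory=list)


def find_agents(cards: list[AgentCard], skill_id: str) -> list[AgentCard]:
    """Return every card that advertises the requested skill."""
    return [c for c in cards if any(s.id == skill_id for s in c.skills)]


# Hypothetical agents owned by two different organizations.
registry = [
    AgentCard("travel-agent", "https://agents.example.com/travel",
              [Skill("book-flight", "Books flights")]),
    AgentCard("finance-agent", "https://agents.example.org/finance",
              [Skill("approve-expense", "Approves expenses")]),
]

matches = find_agents(registry, "approve-expense")
print([c.name for c in matches])
```

The caller only needs the published descriptor to decide whom to contact. How the peer is built stays hidden behind it.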

"We are entering a new era in business where agents will not only act independently but also work together seamlessly as a team" (Charles Lamanna, Corporate Vice President at Microsoft)

This ability for agents to collaborate as a team is what differentiates A2A from earlier AI integration approaches.

A2A protocol and MCP: What are the differences?

In a modern agent stack, A2A and MCP sit alongside frameworks and models, each solving a different layer of the problem. Models (LLMs) provide the reasoning engine. Frameworks like ADK give developers the SDKs and patterns to build and deploy agents. MCP connects those agents and models to external tools and data. A2A standardizes how full agents talk to each other across organizations and frameworks.

Agent2Agent

A2A addresses communication between agents themselves. Instead of just wiring a model to tools, it defines how agents discover one another’s capabilities, share goals and context, and delegate work based on specialized expertise. Crucially, it lets agents trigger actions across organizational and cloud boundaries while still behaving like full agents, not downgraded tools.

Rather than forcing an agent to pretend to be a simple tool, A2A lets agents interact in their native modality, as peers or as users. They can plan together and hand work to one another while keeping their internal state, memory, and tooling opaque. This supports rich, multi‑turn flows such as negotiation, clarifying requirements, or coordinating a shared task.
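A multi-turn exchange of this kind can be sketched as a task that moves through a few states while the remote agent keeps its internals private. The `RemoteAgent` class and its replies are hypothetical. The state names echo those commonly described for A2A tasks, but this is a toy model, not the protocol's wire format.

```python
from enum import Enum


class TaskState(Enum):
    SUBMITTED = "submitted"
    INPUT_REQUIRED = "input-required"
    COMPLETED = "completed"


class RemoteAgent:
    """Hypothetical peer agent; its internal state stays opaque to callers."""

    def __init__(self) -> None:
        self.state = TaskState.SUBMITTED
        self._goal = ""

    def send(self, text: str) -> str:
        if self.state is TaskState.SUBMITTED:
            # First turn: accept the goal, then ask a clarifying question.
            self._goal = text
            self.state = TaskState.INPUT_REQUIRED
            return "Which date should I book?"
        if self.state is TaskState.INPUT_REQUIRED:
            # Second turn: the caller supplied the missing detail.
            self.state = TaskState.COMPLETED
            return f"Done: {self._goal} on {text}"
        raise RuntimeError("task already finished")


agent = RemoteAgent()
print(agent.send("Book a meeting room"), agent.state.value)
print(agent.send("2025-06-01"), agent.state.value)
```

A plain tool call returns once and is done. Here the callee can pause, ask for more input, and resume, which is the behavior that is lost when an agent is wrapped as a tool.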

Model Context Protocol (MCP)

MCP is an open protocol that standardizes how AI models access external data, tools, and services. It gives assistants a consistent way to call search engines, databases, calculators, code executors, and similar capabilities using a client–server model, where the AI assistant is the client and an MCP server fronts the underlying resources.

MCP is primarily about “agent‑to‑tool” or “model‑to‑resource” integration. It reduces the complexity of wiring agents to databases, APIs, business systems, and other services. In MCP, these tools are usually stateless and narrow in scope, such as a calculator, a search endpoint, or a database query function. MCP provides a single, open way for agents to call those capabilities instead of bespoke connectors for each system.

You can think of MCP as “USB‑C for AI apps”: it normalizes how models plug into external systems and makes those integrations much easier to build and maintain.
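As a rough illustration of the client-server pattern, the sketch below sends a JSON-RPC 2.0 `tools/call` request to an in-process stand-in for an MCP server. The `add` tool and the `handle_request` function are assumptions made for this example. A real deployment would use an MCP SDK and a proper transport instead of a direct function call.

```python
import json

# Hypothetical stateless tool that an MCP-style server might front.
TOOLS = {
    "add": lambda args: args["a"] + args["b"],
}


def handle_request(raw: str) -> str:
    """Dispatch one JSON-RPC 2.0 'tools/call' request to a registered tool."""
    request = json.loads(raw)
    if request.get("method") != "tools/call":
        error = {"code": -32601, "message": "Method not found"}
        return json.dumps({"jsonrpc": "2.0", "id": request.get("id"), "error": error})
    params = request["params"]
    value = TOOLS[params["name"]](params["arguments"])
    result = {"content": [{"type": "text", "text": str(value)}]}
    return json.dumps({"jsonrpc": "2.0", "id": request["id"], "result": result})


call = {
    "jsonrpc": "2.0",
    "id": 1,
    "method": "tools/call",
    "params": {"name": "add", "arguments": {"a": 2, "b": 3}},
}
response = json.loads(handle_request(json.dumps(call)))
print(response["result"]["content"][0]["text"])
```

The tool is narrow and stateless: one request in, one result out, with no negotiation between the two sides.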

How they work together

In short, MCP is about connecting agents and models to the data and tools they need, while A2A is about letting multiple agents collaborate as peers in richer, multi‑turn workflows. Used together, MCP powers “agent‑to‑tool” access, and A2A powers “agent‑to‑agent” coordination.

The two protocols are therefore complementary, not competing. In real enterprise scenarios, you typically need both: MCP for clean access to tools and data, and A2A for scalable, cross‑platform multi‑agent collaboration.
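The layering can be pictured with a small sketch in which one agent delegates part of a job to a peer, and the peer relies on a narrow tool internally. Every name here (`TravelAgent`, `FinanceAgent`, `currency_tool`) and the exchange rate are hypothetical. Plain method calls stand in for the A2A and MCP transports.

```python
def currency_tool(amount: float, rate: float) -> float:
    """Stateless tool: the kind of narrow capability MCP would expose."""
    return round(amount * rate, 2)


class FinanceAgent:
    """Peer agent: reached by an A2A-style message, uses tools internally."""

    def handle(self, message: dict) -> dict:
        converted = currency_tool(message["amount"], message["rate"])
        return {"status": "completed", "answer": f"{converted:.2f} EUR"}


class TravelAgent:
    """Delegates the part of the job it is not specialized in."""

    def __init__(self, peer: FinanceAgent) -> None:
        self.peer = peer

    def quote_trip(self, usd_price: float) -> str:
        # Agent-to-agent hop; the peer's tool use is invisible from here.
        reply = self.peer.handle({"amount": usd_price, "rate": 0.9})
        return f"Trip costs {reply['answer']}"


print(TravelAgent(FinanceAgent()).quote_trip(200.0))
```

The travel agent never sees the currency tool. It only sees a peer that accepts a request and returns a result, which is the separation of concerns the two protocols aim for.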

Microsoft’s backing of A2A alongside MCP is a key inflection point for enterprise AI. It enables agents to collaborate seamlessly across products, platforms, and organizations, forming the backbone for more powerful, flexible, and scalable AI systems.

What’s next?

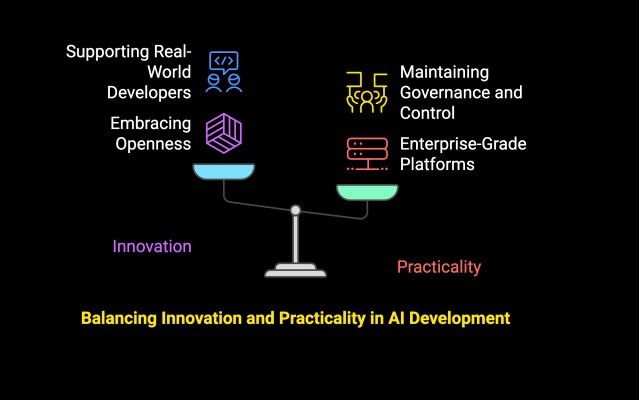

Microsoft has joined the A2A working group on GitHub to help shape the protocol and its tooling. By backing A2A on an open orchestration platform, it is laying the groundwork for collaborative, observable, and adaptive software where agents operate across apps, clouds, and ecosystems rather than in isolation.
